Configuring the Robots.txt File in Magento 2

Setting up the robots.txt file in Magento 2 helps control which parts of your site search engines can crawl. A well-configured robots.txt can improve SEO by keeping crawlers away from duplicate or irrelevant pages, so they spend their time on your priority content.

In Magento 2, configuring robots.txt is straightforward and accessible directly from the admin panel. Follow these steps to set up your file efficiently:

Steps to Configure Robots.txt in Magento 2

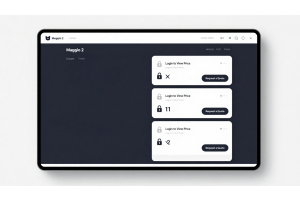

- Access Admin Panel: In the Magento 2 Admin panel, go to Content > Design > Configuration, open the scope you want to edit, and expand Search Engine Robots. In older Magento 2 releases, the same section sits under Stores > Configuration > General > Design.
- Edit Instructions for Robots.txt: In the Search Engine Robots section, you’ll find options to specify custom instructions for search engines. Adjust the Default Robots field to choose whether to allow or restrict indexing across your site.
- Customize Directives: For more control, add specific directives under Edit custom instruction of robots.txt File. Here, you can disallow paths such as /checkout/ or /customer/ that you don’t want crawled.
- Save Configuration: After adding the necessary directives, save your configuration and clear the cache to apply the changes. This ensures search engines receive the updated robots.txt file when they next crawl your site.

| Command | Purpose |
|---|---|
| Disallow | Prevents search engines from crawling a page. |
| Allow | Grants access to specific sections, if needed. |
| Crawl-delay | Sets a delay between page crawls (for performance). |

By managing your robots.txt file, you can ensure search engines focus on your most important pages, enhancing your site's SEO while preserving resources.
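To see how these three commands behave together, here is a minimal sketch that uses Python's standard `urllib.robotparser` module. The rules, the `/checkout/help/` path, and the `store.example.com` host are illustrative assumptions, not Magento defaults.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rule set combining the three commands from the table.
ROBOTS_TXT = """\
User-agent: *
Allow: /checkout/help/
Disallow: /checkout/
Disallow: /customer/
Crawl-delay: 10
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt text into a queryable rule set."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
BASE = "https://store.example.com"  # placeholder host

for path in ("/checkout/cart/", "/checkout/help/", "/summer-sale.html"):
    print(path, rules.can_fetch("Googlebot", BASE + path))
print("crawl delay:", rules.crawl_delay("Googlebot"))
```

Python's parser applies the first matching rule in file order, which is why the `Allow` line is listed before the broader `Disallow`. Other crawlers may resolve overlapping rules differently, so treat this as a quick sanity check rather than a guarantee of how a given search engine will behave.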

Setting Up the Robots.txt File in Magento 2 for SEO Optimization

Configuring the robots.txt file is a critical step for Magento 2 store SEO. This file tells search engines which pages they may and may not crawl, which helps keep unnecessary internal pages out of search results.

Magento 2 includes built-in settings for managing robots.txt, making it easy to give search engine bots clear instructions. Crawlers request the file from the root of your store's domain (for example, /robots.txt), and you can either rely on Magento's default settings or set up your own custom instructions.

Below, I'll guide you through configuring the robots.txt file in Magento 2, assuming your store is already set up and running.

Steps to Configure the Robots.txt File in Magento 2

| Step | Description |
|---|---|
| Access Robots.txt Settings | Go to Content > Design > Configuration in the Magento 2 Admin panel and select the store view to edit. |
| Update Instructions | Under Search Engine Robots, adjust the Default Robots setting for indexing preferences. |
| Save and Verify | Save your changes, then verify that the updated robots.txt is served from the site’s root (/robots.txt). |

This configuration ensures that bots can crawl only what’s necessary, boosting SEO while protecting sensitive site data from unnecessary indexing.

How to Set Up the Robots.txt File in Magento 2

To configure your Magento 2 robots.txt file effectively, follow these steps to guide search engine bots on indexing preferences for your site. Start by logging into the Magento 2 Admin Panel.

Step 1: Access the Design Configuration

- Go to Content > Design > Configuration in the Admin panel.
- Select the Global Design Configuration from the list to edit.

Step 2: Modify Search Engine Robots Settings

- Locate and expand the Search Engine Robots section.
- Set the Default Robots option to one of the following:
  - INDEX, FOLLOW: Allows search engines to index pages and follow the links on them.
  - NOINDEX, FOLLOW: Keeps pages out of the index but lets search engines follow their links.
  - INDEX, NOFOLLOW: Indexes pages but tells search engines not to follow their links.
  - NOINDEX, NOFOLLOW: Keeps pages out of the index and tells search engines not to follow their links.
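The four Default Robots values are combinations of two independent switches: whether a page may be indexed and whether its links may be followed. The sketch below decodes an option into those two flags and builds the kind of robots meta tag a page would carry. The function names are hypothetical, and the exact markup Magento renders is an assumption here.

```python
from html import escape


def parse_robots_option(option: str) -> dict:
    """Split a value like 'NOINDEX, FOLLOW' into index/follow flags."""
    tokens = {token.strip().upper() for token in option.split(",")}
    return {
        "index": "NOINDEX" not in tokens,
        "follow": "NOFOLLOW" not in tokens,
    }


def robots_meta_tag(option: str) -> str:
    """Build a robots meta tag for the option (markup is illustrative)."""
    content = ",".join(token.strip().upper() for token in option.split(","))
    return f'<meta name="robots" content="{escape(content)}"/>'


for option in ("INDEX, FOLLOW", "NOINDEX, FOLLOW",
               "INDEX, NOFOLLOW", "NOINDEX, NOFOLLOW"):
    print(option, parse_robots_option(option), robots_meta_tag(option))
```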

Step 3: Customize Robots.txt Instructions

In Edit Custom Instruction of Robots.txt File, add any specific directives. For instance, during development, you can disallow all pages to prevent early indexing.

Reset Option: Select Reset to Default if you need to revert to Magento’s default robots.txt instructions.

Step 4: Save Your Configuration

After setting your preferences, click Save Configuration to apply the changes.
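Once the configuration is saved, it is worth confirming that the served file contains what you expect. The following is a rough sketch of such a check using only Python's standard library. The helper names and the `store.example.com` host are placeholders, and `fetch_robots` performs a real HTTP request, so it is defined but not called here.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(any_store_url: str) -> str:
    """Return the robots.txt URL at the root of the given site."""
    parts = urlsplit(any_store_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(any_store_url: str, timeout: float = 10.0) -> str:
    """Download robots.txt as text (performs a real HTTP request)."""
    with urlopen(robots_url(any_store_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def missing_rules(robots_body: str, expected: list[str]) -> list[str]:
    """List expected directive lines that do not appear in the file."""
    present = {line.strip().lower() for line in robots_body.splitlines()}
    return [rule for rule in expected if rule.strip().lower() not in present]


# Offline example with a hand-written body instead of a live download.
sample_body = "User-agent: *\nDisallow: /checkout/\n"
print(robots_url("https://store.example.com/women/tops.html?p=2"))
print(missing_rules(sample_body, ["Disallow: /checkout/", "Disallow: /customer/"]))
```

Against a live store, you would pass the result of `fetch_robots("https://your-store-domain/")` to `missing_rules` in place of `sample_body`.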

This setup ensures that search engines index only relevant parts of your Magento 2 store, improving SEO while securing essential areas.

Setting Up Custom Directives in Magento 2 Robots.txt

In Magento 2, you can manage search engine access to different parts of your site by customizing the robots.txt file. This file is essential for SEO because it tells search engines which parts of your site to index and which to avoid. Here’s how to set up useful custom instructions for better control over indexing in Magento 2.

Key Robots.txt “Disallow” Directives for Magento 2

Use the following “Disallow” rules in your Magento 2 robots.txt to block indexing of areas that don’t need to be public or contribute to duplicate content issues.

1. Full Access Permission

Allowing full access enables search engines to index all pages of the site, ideal when you want maximum visibility for all content and have already implemented effective SEO. This directive is useful if you don’t handle sensitive customer information on publicly accessible pages or if you’re managing an informational website.

    User-agent: *
    Disallow:

Allows full access to all site content. Use for informational sites that require broad indexing.

2. Restrict All Folders

Blocking all folders in robots.txt completely prevents search engines from crawling the site. This can be helpful during development stages or when the site isn’t ready for public viewing. However, this approach restricts visibility, so use it sparingly.

    User-agent: *
    Disallow: /

Blocks all site content from indexing. Ideal for development or staging environments.
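The only difference between full access and a full block is the single slash after `Disallow:`, which makes it easy to ship the wrong one. A small sketch, assuming a hypothetical `environment` label that your deployment process supplies, shows one way to pick the right file and confirm its effect with Python's standard `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

ALLOW_ALL = "User-agent: *\nDisallow:\n"
BLOCK_ALL = "User-agent: *\nDisallow: /\n"


def robots_for(environment: str) -> str:
    """Open the site to crawlers only in production (labels are assumed)."""
    return ALLOW_ALL if environment == "production" else BLOCK_ALL


def is_crawlable(robots_txt: str, path: str, agent: str = "Googlebot") -> bool:
    """Check whether the given robots.txt text lets an agent fetch a path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)


for env in ("production", "staging"):
    print(env, is_crawlable(robots_for(env), "/women/tops.html"))
```

Defaulting to the blocking file for any label other than `production` is a deliberate choice in this sketch: an unrecognized environment stays hidden rather than being exposed by accident.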

3. Default Magento Directives

A Magento installation contains directories that aren’t meant for public access. Blocking these paths keeps crawlers away from files that have no value in search results. Directories like /lib/ and /var/ hold library code and runtime data such as logs and cache, which shouldn’t be indexed.

    Disallow: /lib/
    Disallow: /*.php$
    Disallow: /pkginfo/
    Disallow: /report/
    Disallow: /var/
    Disallow: /catalog/
    Disallow: /customer/
    Disallow: /sendfriend/
    Disallow: /review/
    Disallow: /*SID=

Protects sensitive directories from indexing.

4. Block User Account & Checkout Pages

Blocking access to checkout and account pages protects private information and prevents search engines from indexing non-relevant pages, keeping the focus on products and content that matter to customers.

Secures private account and checkout areas:

    Disallow: /checkout/
    Disallow: /onestepcheckout/
    Disallow: /customer/
    Disallow: /customer/account/
    Disallow: /customer/account/login/
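Because robots.txt rules match by path prefix, a rule such as `Disallow: /customer/` already covers `/customer/account/` and `/customer/account/login/`. The extra lines are harmless, but a shorter file is easier to audit. The sketch below, with a hypothetical `prune_redundant` helper, drops rules that a shorter rule already covers. It assumes plain prefixes only and does not handle `*` or `$` wildcards.

```python
def prune_redundant(paths: list[str]) -> list[str]:
    """Keep only paths that are not already covered by a shorter prefix."""
    kept: list[str] = []
    # Shortest first, so every possible covering prefix is seen before
    # the longer paths it covers; ties are broken alphabetically.
    for path in sorted(set(paths), key=lambda p: (len(p), p)):
        if not any(path.startswith(prefix) for prefix in kept):
            kept.append(path)
    return kept


account_rules = [
    "/checkout/",
    "/onestepcheckout/",
    "/customer/",
    "/customer/account/",
    "/customer/account/login/",
]

for path in prune_redundant(account_rules):
    print(f"Disallow: {path}")
```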

5. Exclude Catalog Search Pages

Catalog search pages are dynamically generated and often duplicate content across different queries. Blocking these pages helps prevent content duplication, which can negatively affect SEO.

Prevent crawlers from accessing catalog search pages:

    Disallow: /catalogsearch/
    Disallow: /catalog/product_compare/
    Disallow: /catalog/category/view/
    Disallow: /catalog/product/view/

6. Block URL Filters for Clean Indexing

URL filters can create multiple versions of the same page, leading to duplicate content issues. This directive helps maintain clean URLs, making it easier for search engines to crawl without redundancy.

Avoid duplicate content by blocking URL filter parameters:

    Disallow: /*?dir*
    Disallow: /*?dir=desc
    Disallow: /*?dir=asc
    Disallow: /*?limit=all
    Disallow: /*?mode*
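The filter rules above rely on wildcards: `*` stands for any run of characters, and a trailing `$` anchors the rule to the end of the URL. Python's built-in `urllib.robotparser` does not interpret these, so the sketch below translates a rule into a regular expression to preview what it would match. It approximates the wildcard behavior documented by major search engines, and the helper names and sample URLs are hypothetical.

```python
import re


def rule_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into an anchored regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal text and let each '*' match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_blocked(url_path: str, patterns: list[str]) -> bool:
    """Return True if any Disallow pattern matches the path and query."""
    return any(rule_to_regex(p).match(url_path) for p in patterns)


FILTER_RULES = ["/*?dir*", "/*?limit=all", "/*?mode*"]

for url in ("/women/tops.html",
            "/women/tops.html?dir=asc",
            "/women/tops.html?p=2&dir=asc"):
    print(url, is_blocked(url, FILTER_RULES))
```

The last URL is not matched because `dir` follows another parameter and is introduced by `&` rather than `?`. If filters can appear in any position on your store, consider adding `&` variants of each rule.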

7. Restrict CMS and System Folders

Magento’s CMS and core system files are essential for functionality but are irrelevant to users. Blocking these paths hides core files and prevents search engines from indexing sensitive or irrelevant technical content.

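Block core directories from being indexed:

    Disallow: /app/
    Disallow: /bin/
    Disallow: /dev/
    Disallow: /lib/
    Disallow: /phpserver/
    Disallow: /pub/

If your storefront serves CSS, JavaScript, or images from URLs under /pub/ (for example /pub/static/ or /pub/media/), leave out the /pub/ rule, because search engines need those assets to render your pages.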

8. Avoid Duplicate Content with Tags and Reviews

Tag and review pages often duplicate product information or display repetitive content. Blocking these can help avoid content duplication penalties and prioritize actual product and category pages in search engine results.

Prevent duplicate content by blocking tags and reviews:

    Disallow: /tag/
    Disallow: /review/

Common Robots.txt Directives for Magento 2

| Directive | Function | Use Case |
|---|---|---|
| Full Access Permission | Allow search engines to crawl all pages | Informational or content-rich sites |
| Restrict All Folders | Block all site access | Staging or development sites |
| Default Magento Directives | Block core system files | Secure sensitive areas of Magento stores |
| User Account & Checkout | Restrict private customer areas | Hide checkout and account pages in SEO |
| Exclude Catalog Search | Prevent duplicate content in search pages | Catalog-heavy e-commerce sites |
| Block URL Filters | Minimize URL parameter indexing | Filter-heavy Magento navigation |
| Restrict CMS/System Folders | Prevent indexing of system directories | Keep CMS backend hidden from SEO |
| Duplicate Content - Tags/Reviews | Prevent duplicate tag and review pages | Focus on main product and category pages |

Optimize Crawl Budget by Blocking Low-Value Pages

Crawl budget optimization helps search engines prioritize high-value pages on your Magento site. Blocking access to low-value or irrelevant pages, such as search results or specific parameters, ensures that crawlers focus on indexing your most important content, enhancing SEO effectiveness.

Example Commands to Add:

    Disallow: /search/
    Disallow: /*?q=
    Disallow: /*&sort=

Explanation:

- Disallow: /search/ - Blocks site-wide search pages that don’t offer unique content value.
- Disallow: /*?q= - Prevents indexing of specific search queries to avoid duplicate content.
- Disallow: /*&sort= - Blocks unnecessary URL parameters, reducing duplicate content and focusing the crawl budget on product and category pages instead.
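One detail worth noting: a rule like `/*?q=` only matches when `q` is the first query parameter, and `/*&sort=` only matches when `sort` follows another parameter. To cover both positions, each parameter needs a `?` rule and an `&` rule. The sketch below generates both from a list of parameter names. The `param_rules` helper is hypothetical, and the parameter names are the ones used in the example commands.

```python
def param_rules(params: list[str]) -> list[str]:
    """Emit Disallow lines for each parameter in first and later positions."""
    rules: list[str] = []
    for name in params:
        rules.append(f"Disallow: /*?{name}=")  # parameter comes first
        rules.append(f"Disallow: /*&{name}=")  # parameter follows another
    return rules


for line in param_rules(["q", "sort"]):
    print(line)
```

Paste the generated lines into the custom instructions field, and review the list whenever new filters or sort options are added to the storefront.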

Adding these directives can help improve SEO by allowing search engines to crawl and index more relevant pages, which can lead to a more effective search engine presence. Regularly review and update your robots.txt file to align with changes in site structure and SEO goals.

Conclusion

In summary, configuring the robots.txt file in Magento 2 is a crucial step for optimizing SEO by managing what web crawlers can access and index on your site. By customizing the file to restrict access to sensitive pages, filter parameters, and low-value content, you can ensure search engines prioritize your most relevant and valuable pages.

Additionally, blocking internal, search, and filter-based URLs improves your crawl budget, enabling search engines to focus on product and category pages that enhance user experience and SEO impact. Regularly reviewing your robots.txt file as your site grows helps keep your SEO strategy aligned with current goals, ensuring a well-managed, optimized crawl process for better visibility and performance.

FAQs

What is the purpose of the robots.txt file in Magento 2?

The robots.txt file in Magento 2 directs web crawlers on which pages or sections of your site to index and which to ignore. This helps optimize SEO by preventing unnecessary or sensitive pages from being listed in search engine results.

How do you access the robots.txt file settings in Magento 2?

In Magento 2, you can access robots.txt settings by logging into the Admin Panel, navigating to Content > Design > Configuration, and then selecting Global Design Configuration to update the Search Engine Robots section.

What does setting “INDEX, FOLLOW” in Default Robots mean?

“INDEX, FOLLOW” instructs search engines to index the page and follow any links on it. This is ideal for content you want to be fully visible in search results.

When should you use “NOINDEX, NOFOLLOW” in Default Robots?

“NOINDEX, NOFOLLOW” is used when you want to hide pages entirely from search engines and stop them from following links on those pages. It’s typically used for admin, checkout, and user account pages.

Can you add custom instructions to the Magento 2 robots.txt file?

Yes, Magento 2 allows you to add custom instructions in the robots.txt file, such as restricting specific folders, pages, or URLs that aren’t necessary for indexing to improve SEO.

What is the impact of blocking the /lib/ and /var/ folders in robots.txt?

Blocking folders like /lib/ and /var/ keeps search engines away from system files, so crawlers spend their time on relevant content. Because robots.txt is only advisory, access to these folders should still be restricted at the web server.

Why should you exclude catalog search pages from indexing?

Catalog search pages often generate duplicate content, which can negatively impact SEO. Excluding them helps focus search engines on unique product and category pages.

How do URL filters affect SEO, and how can you manage them with robots.txt?

URL filters create multiple versions of a page, leading to duplicate content issues. Adding “Disallow” rules for filters in robots.txt helps keep the indexing clean and SEO-focused.

What’s the benefit of using “Disallow: /checkout/” in Magento’s robots.txt?

Using “Disallow: /checkout/” keeps search engines from crawling checkout-related pages, which aren’t useful for SEO, and helps keep private user areas out of search results.

Why is it important to regularly review your robots.txt file in Magento 2?

Regularly reviewing robots.txt ensures your indexing strategy remains up-to-date, aligns with any site changes, and avoids exposing sensitive or irrelevant pages to search engines.